Your Metrics Are Lying: How to Manage the Impact of Bot Traffic on Your Data

According to Cloudflare’s “Radar Report,” around 40% of all internet traffic is generated by bots. For those of us in marketing and data analysis, this is a big deal: bot traffic can skew our reports and lead us to trust inaccurate metrics, often without our even knowing it. It’s hard enough to make sure data is being collected properly; with bots in the mix, how can you get real insights?

In response, the most popular digital analytics tools have started to offer bot filtering features. While it is advisable to activate them, they have shown very low effectiveness against the many types of bots that abound on the web. Worse, they can mistakenly filter out legitimate, beneficial traffic, leaving the remaining data skewed toward inaccurate traffic.

Bottom line, our data is at risk.

How does bot traffic impact your business?

For companies that base business decisions on data, bot traffic can have detrimental consequences for their digital strategies. It skews metrics such as conversion rates, bounce rates, total users, and sessions, leading to unexplained fluctuations. Inflated traffic can also raise the cost of digital analytics tools, since many pricing models are based on the number of visits. AI tools and implementations trained on data contaminated by bot traffic can produce inaccurate insights. Bots can also bog down websites by overloading servers, resulting in slow page loads or, in severe cases, making the site inaccessible to users. In extreme situations, letting unwanted traffic through can create security vulnerabilities and lead to leaks of sensitive information.
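To see how even moderate bot traffic distorts a rate metric, here is a minimal sketch with made-up numbers (the session and conversion counts are hypothetical, not client data). It assumes bots add sessions but never convert, so they inflate the denominator and drag the conversion rate down.

```python
def conversion_rate(conversions: int, sessions: int) -> float:
    """Return conversions per session as a percentage."""
    if sessions == 0:
        return 0.0
    return 100.0 * conversions / sessions


# Hypothetical figures for illustration only.
human_sessions = 1_000
human_conversions = 30
bot_sessions = 500  # assumption: bots generate sessions but never convert

true_rate = conversion_rate(human_conversions, human_sessions)
reported_rate = conversion_rate(human_conversions, human_sessions + bot_sessions)

print(f"True conversion rate:     {true_rate:.2f}%")
print(f"Reported conversion rate: {reported_rate:.2f}%")
```

In this example, reported sessions rise by 50% while the conversion rate falls from 3% to 2%, even though nothing changed for real users.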

Recently, one of our clients asked us to help investigate sudden traffic spikes originating from Frankfurt in the early hours of the morning, which did not align with their historical data. After analyzing the reports and cross-referencing the available dimensions, we discovered that, during certain periods, 90% of the total users recorded exhibited behavior that was difficult to attribute to humans. This not only seriously affected data quality but also incurred significant costs due to the volume of visits the website was receiving.

However, it’s not just extreme situations that can affect our data quality. Even a small percentage of anomalies can lead to unreliable reports. So, how can we stop this and keep our data reliable?

Know the enemy

The first step to effectively counteract bots is to understand them. Not all bots are alike; each type demands a unique strategy. A common classification distinguishes between malicious and non-malicious bots. Let’s examine some typical examples of malicious ones.

Types of malicious traffic bots

1. Scalper bots:

These programs snap up tickets and other limited-availability goods at lightning speed, only to resell them later at higher prices.

2. Spam bots:

Designed to flood your inbox or messages with junk, often laden with malicious links. Who hasn't been on the receiving end of annoying spam?

3. Scraper bots:

These bots automatically extract data from websites, often copying content from competitors to gain an edge.

Non-malicious bots, on the other hand, handle tedious tasks quickly. They gather large amounts of data that would otherwise take days or even months to retrieve, relieving humans of repetitive work.

Types of beneficial traffic bots

1. Spider (web crawler):

Google's bots are some of the most advanced. They relentlessly search the web for videos, images, text, links, and more. Without these crawlers, websites wouldn't get any organic search traffic.

2. Backlink checkers:

These tools help you find all the links a website or page receives from other sites. They’re crucial for SEO.

3. Website monitoring bots:

These bots watch over websites and can alert the owner if, for instance, the site is under attack by hackers or goes offline.
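Many beneficial bots announce themselves in their User-Agent header, which makes them the easiest category to recognize and exclude. The sketch below assumes a small, hypothetical signature list: Googlebot, bingbot, AhrefsBot, SemrushBot, and UptimeRobot are real user-agent tokens, but the list is far from complete, and the generic pattern can produce false positives. Malicious bots frequently spoof ordinary browser user agents, so a "likely_human" label is not proof of a human visitor.

```python
import re

# Hypothetical mapping of self-declared bot tokens to categories.
# A real deployment would rely on a maintained list; this is illustrative only.
KNOWN_BOT_SIGNATURES = {
    "crawler": ["Googlebot", "bingbot"],
    "backlink_checker": ["AhrefsBot", "SemrushBot"],
    "monitoring": ["UptimeRobot"],
}

# Generic hints that often appear in automated clients' user agents (may over-match).
GENERIC_BOT_PATTERN = re.compile(r"bot|crawler|spider|python-requests|curl", re.IGNORECASE)


def classify_user_agent(user_agent: str) -> str:
    """Return a coarse label for a User-Agent string.

    Known signatures are checked first, then generic automation hints;
    anything else is labeled "likely_human".
    """
    lowered = user_agent.lower()
    for category, tokens in KNOWN_BOT_SIGNATURES.items():
        if any(token.lower() in lowered for token in tokens):
            return category
    if GENERIC_BOT_PATTERN.search(user_agent):
        return "unknown_bot"
    return "likely_human"


if __name__ == "__main__":
    samples = [
        "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
        "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)",
        "Mozilla/5.0+(compatible; UptimeRobot/2.0; http://www.uptimerobot.com/)",
        "python-requests/2.31.0",
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    ]
    for ua in samples:
        print(f"{classify_user_agent(ua):>16}  {ua[:60]}")
```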

My goal isn’t to exhaustively detail every type of bot out there, as they are constantly evolving. Instead, I want to highlight the various behaviors that influence our filtering and removal strategies, as well as the complexity involved. In the end, whether they are good or bad, all bots are unwanted in our reports, and we need to minimize their impact on our data.

Countering bot attacks with the right tools

Today, both automated and manual strategies exist to tackle this challenge. Among automated solutions, bot filtering programs stand out, either built into analytics tools or offered as specialized AI-driven bot detection software. However, as mentioned earlier, their effectiveness tends to be low, and many of them come with associated costs.

On the other hand, we have non-automated solutions that provide better results, and we can categorize them based on the filtering approach they adopt:

Reactive Approach: Apply custom filters at the report level. This method is simple and flexible, requiring no development-level changes, and it’s an effective first step for early detection. Tools such as GA4 segments, Looker Studio filters, and data warehouse queries make it easy to implement, though it’s less robust, since the bot traffic is still collected and has to be filtered out of each report.
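To make the reactive approach concrete, here is a minimal sketch of a report-level filter applied to exported session data in Python. The field names, the sample rows, and the exclusion rule (early-morning Frankfurt sessions with zero engagement, loosely inspired by the case above) are all hypothetical; in practice, the rule would come from your own anomaly analysis, and the same logic could live in a GA4 segment or a warehouse query.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Session:
    # Hypothetical fields, e.g. from a warehouse export of analytics data.
    session_id: str
    city: str
    hour: int  # local hour of day, 0-23
    engagement_seconds: float
    converted: bool


def looks_like_bot(s: Session) -> bool:
    """Hypothetical rule: early-morning Frankfurt sessions with no engagement."""
    return s.city == "Frankfurt" and 0 <= s.hour < 6 and s.engagement_seconds == 0


def summarize(sessions: List[Session]) -> Tuple[int, float]:
    """Return (session count, conversion rate in %) for a list of sessions."""
    total = len(sessions)
    conversions = sum(s.converted for s in sessions)
    rate = 100.0 * conversions / total if total else 0.0
    return total, rate


def report(sessions: List[Session],
           exclude: Callable[[Session], bool] = looks_like_bot):
    """Apply the filter at report time only; the raw data stays untouched."""
    kept = [s for s in sessions if not exclude(s)]
    return summarize(sessions), summarize(kept)


# Hypothetical sample rows for illustration.
SAMPLE_SESSIONS = [
    Session("a", "Madrid", 14, 95.0, True),
    Session("b", "Berlin", 10, 40.0, False),
    Session("c", "Frankfurt", 3, 0.0, False),
    Session("d", "Frankfurt", 4, 0.0, False),
    Session("e", "Frankfurt", 15, 120.0, True),
]

if __name__ == "__main__":
    raw, filtered = report(SAMPLE_SESSIONS)
    print(f"Raw:      {raw[0]} sessions, {raw[1]:.1f}% conversion")
    print(f"Filtered: {filtered[0]} sessions, {filtered[1]:.1f}% conversion")
```

Because the rule is a plain function passed to `report`, it can be tightened or replaced as new anomalies are found without touching the collected data.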

Preventive Approach: Implement filters before data is collected. Although this can be challenging and resource-intensive, it keeps bot traffic out of reports altogether and restricts bots from accessing the website and its servers.
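The preventive approach can be sketched as a hypothetical server-side gate that runs before a page is served and before any analytics hit is sent. The token lists, thresholds, and three-way decision below are assumptions for illustration, not a specific product’s behavior. The design separates "serve" from "track" so that beneficial crawlers keep their access (which matters for SEO) while still staying out of reports; a per-IP sliding-window rate limit catches high-volume clients that spoof browser user agents.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Optional

# Hypothetical signature lists; tune them to your own traffic.
CRAWLER_TOKENS = ("googlebot", "bingbot")
BLOCKED_UA_TOKENS = ("python-requests", "curl", "headlesschrome")


class PreventiveGate:
    """Hypothetical pre-collection gate.

    decide() returns one of:
      "block"           - reject the request entirely
      "serve_untracked" - serve the page but skip analytics (e.g. search crawlers)
      "serve_and_track" - treat as a normal visitor
    """

    def __init__(self, max_requests: int = 30, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        # Per-IP timestamps of recently accepted requests (sliding window).
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def decide(self, ip: str, user_agent: str, now: Optional[float] = None) -> str:
        now = self.clock() if now is None else now
        ua = (user_agent or "").lower()

        # Missing or deny-listed user agents are rejected outright.
        if not ua or any(token in ua for token in BLOCKED_UA_TOKENS):
            return "block"

        # Beneficial crawlers still need access, but stay out of reports.
        # User agents can be spoofed, so production code should verify the
        # claim (for example, with a reverse DNS lookup); not shown here.
        if any(token in ua for token in CRAWLER_TOKENS):
            return "serve_untracked"

        # Sliding-window rate limit: discard timestamps older than the window.
        hits = self._hits[ip]
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return "block"
        hits.append(now)
        return "serve_and_track"


if __name__ == "__main__":
    gate = PreventiveGate(max_requests=3, window_seconds=10)
    for t in (0, 1, 2, 3, 11):
        print(t, gate.decide("203.0.113.7", "Mozilla/5.0", now=t))
```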

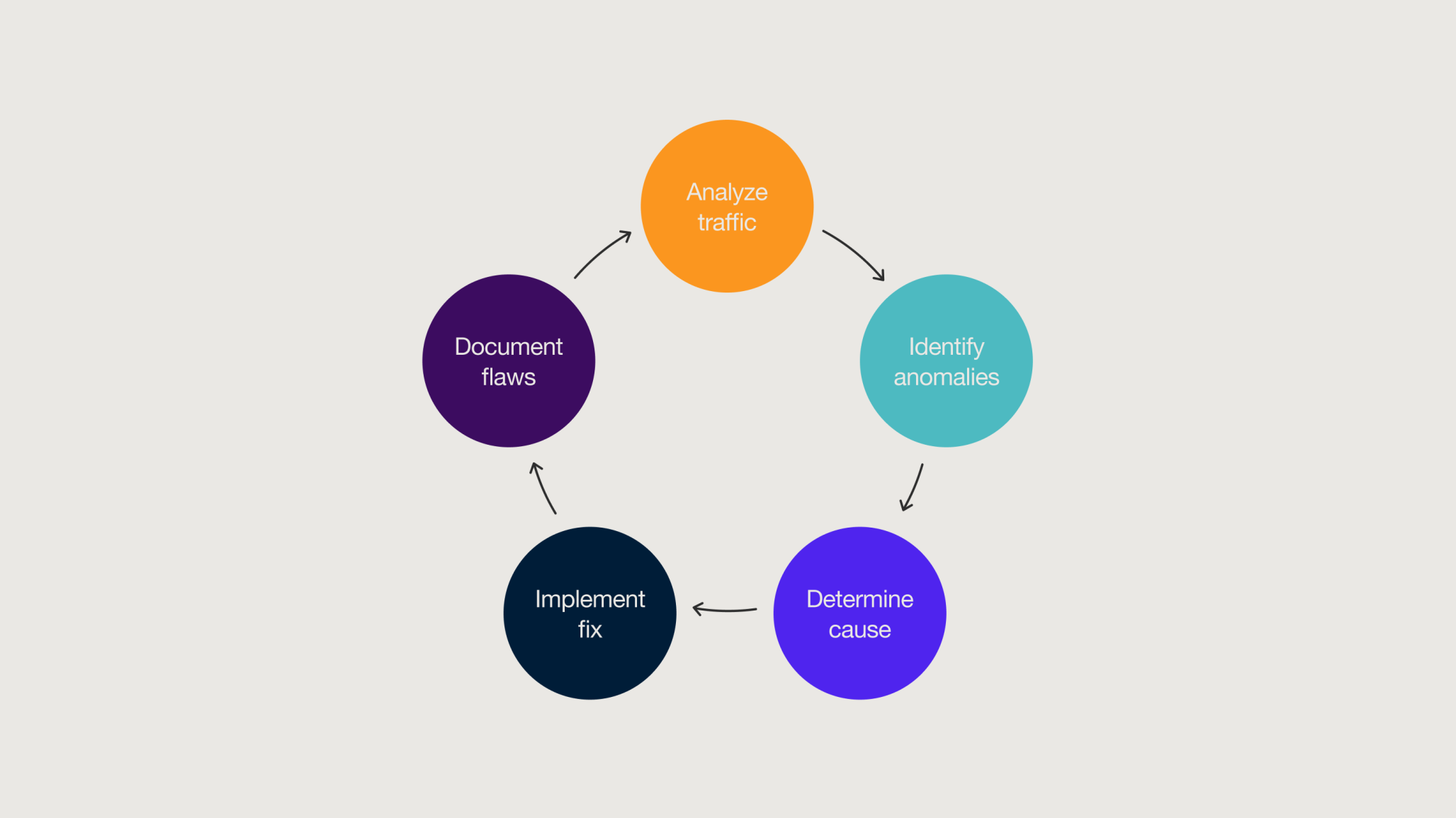

Establishing a data quality review cycle

To keep our data free from bot traffic and ensure optimal results, it’s best to use a comprehensive strategy that combines preventive and reactive measures. This is known as the data quality review cycle: a continuous monitoring model designed to detect anomalies as they arise. It involves collaboration among analysts, developers, and product owners to find efficient solutions that safeguard the integrity and reliability of the data.
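One simple way to automate the "detect anomalies" step of such a cycle is to compare each day’s sessions against a trailing window. The sketch below uses a z-score with an assumed 7-day window and a threshold of 3 standard deviations; both values and the sample series are hypothetical, and seasonality-aware methods may suit real traffic better. The same check could be run per city or per hour to surface spikes like the Frankfurt one.

```python
from statistics import mean, stdev
from typing import List, Sequence


def flag_anomalies(daily_sessions: Sequence[float], window: int = 7,
                   threshold: float = 3.0) -> List[int]:
    """Return indices of days whose value deviates from the mean of the
    preceding `window` days by more than `threshold` standard deviations."""
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(daily_sessions)):
        history = daily_sessions[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all is unusual.
            if daily_sessions[i] != mu:
                flagged.append(i)
            continue
        z = (daily_sessions[i] - mu) / sigma
        if abs(z) > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily session counts with one suspicious spike.
DAILY_SESSIONS = [1000, 1020, 980, 1010, 995, 1005, 990, 1015, 2600, 1000]

if __name__ == "__main__":
    print(flag_anomalies(DAILY_SESSIONS))
```

Note that a flagged spike remains in the trailing window for the following days and widens the baseline; excluding flagged days from the history is a possible refinement.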

Although we can’t entirely eliminate bot traffic from our reports, proactively implementing data quality review strategies offers us practical and effective ways to address this issue.

In summary

- Bots can serve both harmful and benign purposes; in both cases, it's crucial to keep them out of reports.

- Bot traffic has negative consequences for both digital and commercial strategies.

- While analytics platforms have features that automatically block some bot traffic, their effectiveness is limited.

- Constantly monitoring anomalies in the reports is essential for identifying bot traffic.

- To keep unwanted traffic from influencing the data and ensure it is not biased or contaminated, it’s necessary to implement both preventive and reactive measures.

- Including a data quality review cycle in the workflow is crucial for keeping the reports free from bot traffic.
